Share AI compute through time-sharing scheduling and run hyperscale deep learning training elastically

Hedge funds that use AI for investing

Basic scientific research in AI

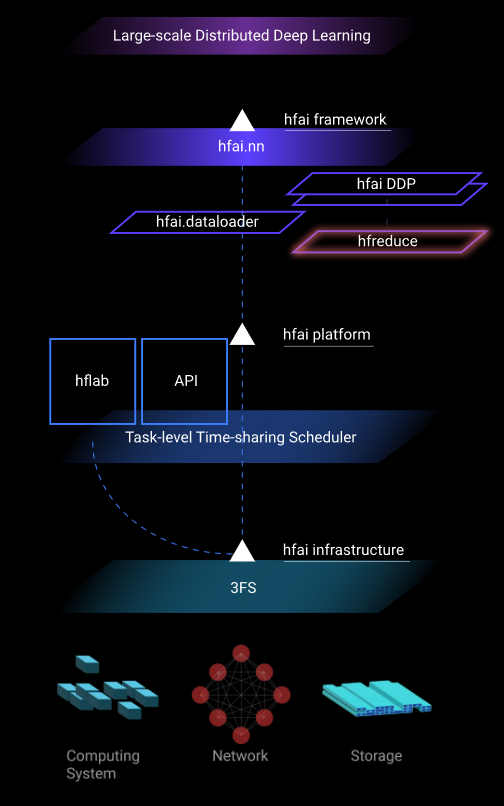

"Firefly II" is built around task-level time-sharing: the scheduling system responds within seconds, so every researcher gets a smooth training experience. The platform is backed by a strong software layer: a high-performance operator library (hfai.nn), a distributed training communication framework (hfreduce), and a high-capacity, high-bandwidth file system (3FS) designed specifically for AI development. Together they let AI models scale out to multiple nodes for massively parallel training at peak performance.

NOI/ACM gold-medalist team continuously optimizes core operators

LSTM operator: 20% to 6x faster

Attention operator: 30% faster

Allreduce optimized for Firefly II's custom hardware

Good communication capabilities without specialized hardware

20% faster BERT-Large training at 100 nodes
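
For readers unfamiliar with the allreduce operation mentioned above, here is a minimal single-process sketch of the textbook ring allreduce algorithm. It is not hfreduce's implementation, which this page does not describe; the function name `ring_allreduce` and the list-of-lists representation of per-worker buffers are assumptions made purely for illustration.

```python
def ring_allreduce(buffers):
    """Simulate a sum-allreduce across workers arranged in a ring.

    buffers: one equal-length list of numbers per worker (hypothetical layout).
    Returns a new list of buffers, each holding the element-wise sum.
    """
    n = len(buffers)
    length = len(buffers[0])
    # Chunk c covers indices bounds[c] .. bounds[c + 1] - 1.
    bounds = [(i * length) // n for i in range(n + 1)]
    data = [list(b) for b in buffers]  # work on copies

    def chunk(c):
        return range(bounds[c], bounds[c + 1])

    # Phase 1 (reduce-scatter): in step s, worker r sends chunk (r - s) % n
    # to its right neighbour, which adds it to its own copy.
    for s in range(n - 1):
        sends = []
        for r in range(n):
            c = (r - s) % n
            sends.append(((r + 1) % n, c, [data[r][i] for i in chunk(c)]))
        for dst, c, payload in sends:
            for i, v in zip(chunk(c), payload):
                data[dst][i] += v

    # Worker r now holds the fully reduced chunk (r + 1) % n.
    # Phase 2 (all-gather): pass the finished chunks around the ring.
    for s in range(n - 1):
        sends = []
        for r in range(n):
            c = (r + 1 - s) % n
            sends.append(((r + 1) % n, c, [data[r][i] for i in chunk(c)]))
        for dst, c, payload in sends:
            for i, v in zip(chunk(c), payload):
                data[dst][i] = v

    return data


if __name__ == "__main__":
    grads = [[1, 2, 3, 4], [10, 20, 30, 40], [100, 200, 300, 400]]
    for worker, buf in enumerate(ring_allreduce(grads)):
        print(worker, buf)
```

In the ring scheme each worker sends and receives roughly twice the buffer size in total, regardless of the number of workers, which is one reason allreduce is a common primitive for synchronizing gradients in data-parallel training.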

Self-developed distributed parallel file system

Saturates the physical high-speed network bandwidth to push performance limits

I/O responses: 1.8 billion per second

Read and write bandwidth: 7.0 TB/s

8.0 TB/s read

500 GB/s write

Figures based on cluster usage in February 2022